Outcome-based pricing is NOT the future

Pricing will change, but not like that

When AI first took off, Benchmark’s Sarah Tavel wrote “Sell work, not software”. She outlined why AI will change how we pay for software:

The first tranche of products and startups leveraging LLMs has kept within the mental model of selling software to achieve step-function improvements in end-user productivity. The "Copilot for [x]" trend reflects this mental model. While there are fantastic startups innovating to improve employee productivity, LLMs create an opportunity for startups to look beyond this way of thinking and discover surface area that previously was out of bounds for selling software given the required GTM and pricing limitations of software. To do this, rather than sell software to improve an end-user's productivity, founders should consider what it would look like to sell the work itself.

We’ve seen this before. When buying software meant buying a license to run something on your own servers (and the obligation for uptime, performance and upgrades), you bought the tool itself and would mold it to your purposes.

The most successful software companies built databases, CRMs, servers, ERP, etc.—all categories that require customization. Software meant buying raw materials you had to make usable.

Companies’ documents would be created using an office suite and hosted on a server with a database to ensure everyone has access to the docs they need. And we haven’t even mentioned that you’d have admins maintaining all of that stuff!

SaaS folded all of that into Notion.

In every category, we can now have software pre-customized for niche use cases. This was unthinkable before the cloud, mainly because most people didn’t want to deal with the IT infrastructure of hosting their own servers and customizing and maintaining software.

So what we call “the SaaS revolution” is actually two phenomena:

Cloud-hosted software that’s continually updated and can be accessed anywhere.

Subscription pricing on a regular basis.

Subscription pricing logically follows from cloud hosting because it transfers the ongoing cost of hosting from customer to vendor. And one-time revenue for ongoing costs is a bad idea, so subscriptions make the customer cover that cost.

Product revolutions require business model revolutions. AI is another product revolution on (at least) the scale of SaaS. But while SaaS was about helping workers do their work better, AI can do (part of) what the worker would’ve done.

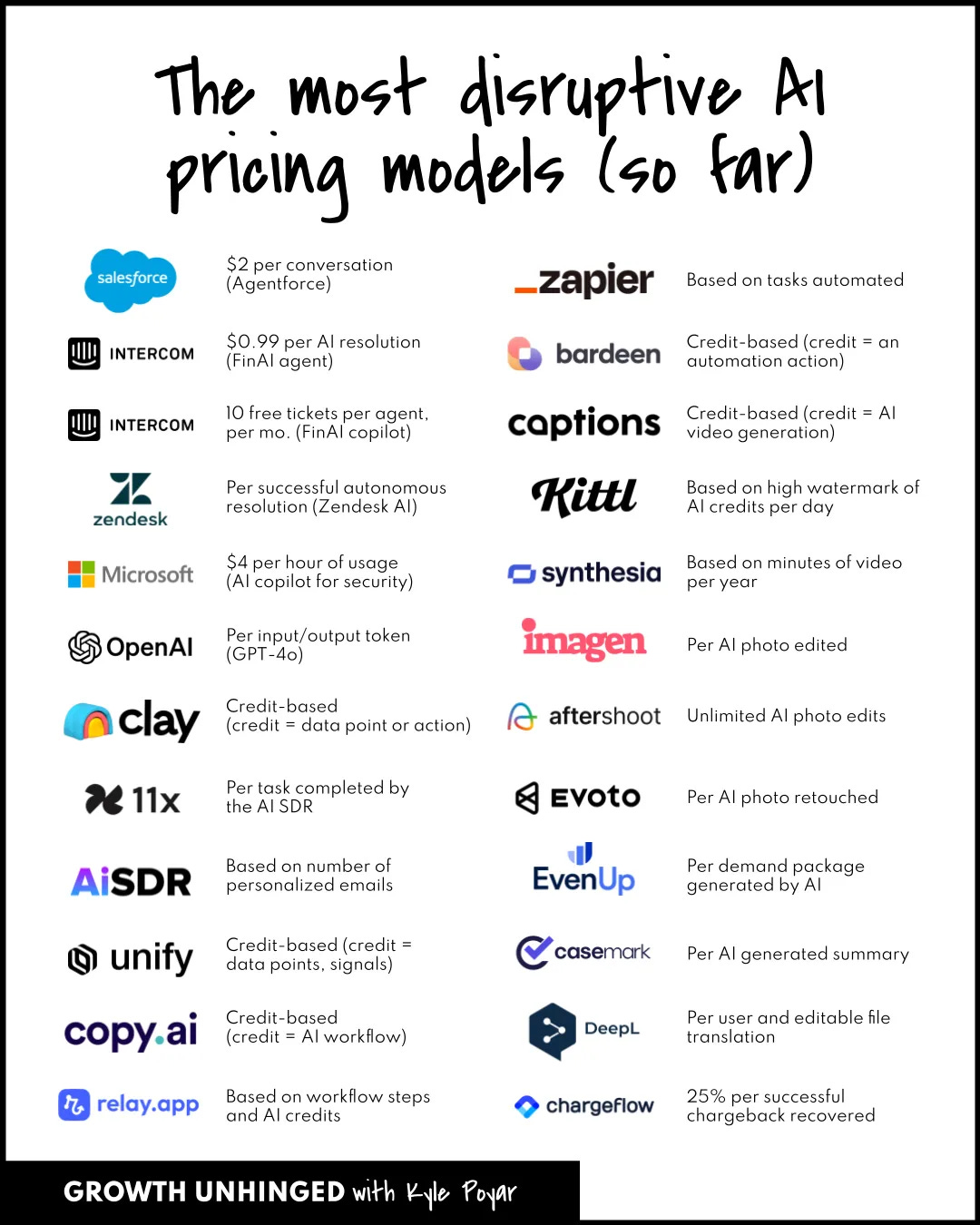

If the SaaS revolution is any guide, AI will force another monetization revolution. Just look at all of this experimentation (credit goes to ):

Companies are doing this because pure-play usage-based pricing for AI has a margin problem: If you just add x% to your OpenAI costs, you’re incentivized to maximize consumption, which rarely benefits anyone.

Think of an AI-powered support chatbot incentivized to write the longest response, not the most useful one.
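To make that incentive concrete, here is a minimal sketch of cost-plus billing. The per-token cost, the markup and the token counts are made-up numbers for illustration, not real OpenAI prices or any vendor's actual billing logic.

```python
def cost_plus_price(tokens_used: int, cost_per_token: float, markup: float) -> float:
    """Bill the customer the vendor's model cost plus a percentage markup."""
    model_cost = tokens_used * cost_per_token
    return model_cost * (1 + markup)


# Hypothetical numbers, chosen only to illustrate the incentive.
COST_PER_TOKEN = 0.000002  # assumed model cost per token
MARKUP = 0.30              # assumed 30% markup on top of model cost

concise_answer = cost_plus_price(300, COST_PER_TOKEN, MARKUP)
rambling_answer = cost_plus_price(3_000, COST_PER_TOKEN, MARKUP)

# Revenue scales with response length, not with usefulness.
print(f"concise: ${concise_answer:.6f}, rambling: ${rambling_answer:.6f}")
```

Under these assumptions, the rambling answer earns the vendor ten times as much as the concise one, even if the concise one is the better answer.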

Because AI needs a native pricing model, there’s a new variant of usage-based pricing: outcome-based pricing. Like anything new, people call it “the future” and predict it’ll be ubiquitous. But will outcome-based pricing dominate like per-seat pricing did?

If you’re not familiar, the idea is to charge customers when your (AI) product gets them a specified outcome. Intercom’s Fin support agent is a perfect example: Instead of a monthly fee, it charges $0.99 “per resolution”—support chats that didn’t result in a ticket.

This aligns incentives better (customers only pay for results) and bolsters Intercom’s margins, because I guarantee a single resolution doesn’t use half a million tokens (250k words or so), which is roughly what a dollar gets you on the GPT-4o API.
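A rough back-of-the-envelope sketch of that margin, using only the figures above: $0.99 per resolution and roughly 500k tokens per dollar. The 20,000 tokens per resolution is a hypothetical guess, and the calculation ignores every cost other than the model API.

```python
PRICE_PER_RESOLUTION = 0.99  # Fin's per-resolution price quoted above
TOKENS_PER_DOLLAR = 500_000  # the article's rough figure, not an official price


def resolution_margin(tokens_used: int) -> float:
    """Gross margin on one resolution, counting only model cost."""
    model_cost = tokens_used / TOKENS_PER_DOLLAR
    return (PRICE_PER_RESOLUTION - model_cost) / PRICE_PER_RESOLUTION


def break_even_tokens() -> int:
    """Tokens a single resolution could burn before the margin hits zero."""
    return round(PRICE_PER_RESOLUTION * TOKENS_PER_DOLLAR)


# Hypothetical: a resolution that consumes 20,000 tokens in total.
print(f"margin at 20k tokens: {resolution_margin(20_000):.1%}")
print(f"break-even tokens: {break_even_tokens():,}")
```

Even if the real token count per resolution were several times the guess, the margin would stay high as long as it remains far below the break-even point.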

So have we found the ideal pricing strategy for AI (agents) moving forward? I don’t think so. I believe it’ll remain a niche pricing strategy.

Let’s explore why I believe that, where outcome-based pricing will work and where it won’t.

Most jobs aren’t paid a commission

Outcome-based pricing is basically paying (non-human) workers a commission. But most tasks in companies aren’t paid by commission.

That’s because it’s extremely hard to create commission incentives for most professions.

Commission works when the value of your work is easy to calculate. Real estate brokers get a percentage of the sale because you can directly measure the value created.

Salespeople, brokers and agents are commonly paid commissions. Most other professions are too abstract or qualitatively different for this to make sense.

They’re impossible to measure. Think of HR, which creates outcomes too abstract to pay by the outcome.

The outcomes vary qualitatively. You can measure how many PRs an engineer has submitted, but you don’t know if a PR is a tricky new feature or a minor UI bug fix.

The outcome shouldn’t be maximized. You wouldn’t pay your social media marketer by the like because of the content they would post.

The outcomes are too rare for a human to sustain themselves (and their family). A PM could get paid on the outcome of a successful feature launch, but their life would become precarious.

AI tooling mirrors this. Imagine a perfect HR AI model that obviates every HR professional. What outcome would you pay it for? A subscription makes more sense, the same way a salary makes more sense for human HR.

Outcome-based pricing makes sense where humans get paid commissions, as long as we think of AI agents as doing the work of humans. But that doesn’t need to be the limit, because AI has neither rent to pay nor a family to feed, and it doesn’t get tired.

So even if it doesn’t make sense for a human to be paid on commission, AI could do that job by the outcome.

Intercom Fin is in that sweet spot. Customer support is normally not paid by the ticket, but Intercom’s Fin is. Support is a cost center that resolves x tickets for y dollars, and if you divide y by x you get the cost per ticket. The outcome is measurable.

These will be the Goldilocks outcome-based products: jobs where humans wouldn’t be paid a commission, but which create easy-to-measure outcomes.
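The division is simple enough to write down. In this sketch the support budget and ticket volume are hypothetical; only the $0.99 comes from the article.

```python
def cost_per_ticket(total_support_cost: float, tickets_resolved: int) -> float:
    """y dollars divided by x tickets gives the cost of one resolved ticket."""
    if tickets_resolved <= 0:
        raise ValueError("need at least one resolved ticket")
    return total_support_cost / tickets_resolved


# Hypothetical team: $20,000 a month resolving 4,000 tickets.
human_cost = cost_per_ticket(20_000, 4_000)
FIN_PRICE_PER_RESOLUTION = 0.99  # price quoted earlier in the article

print(f"human: ${human_cost:.2f} per ticket, Fin: ${FIN_PRICE_PER_RESOLUTION:.2f} per resolution")
```

Once a cost center has a per-unit number like this, a per-outcome price has something to be compared against.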

But even if you have that, can you even measure it?

Attribution is hard

Intercom could launch outcome-based pricing because they already own the help desk. They know when chats are opened, when tickets are submitted, what customers write and what support agents write and when cases are resolved.

Most companies don’t have that luxury. Outcome-based pricing requires deep access.

It’s hard to attribute even if you have all the data: If a user rolls their eyes, thinks “what a useless AI chatbot” and slams their laptop shut in anger, they didn’t submit a ticket. Does the system count that as a resolution?
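Here is a small sketch of why that matters for billing. The `Chat` fields and both rules are hypothetical illustrations, not how Intercom actually counts resolutions.

```python
from dataclasses import dataclass


@dataclass
class Chat:
    ticket_filed: bool            # did the chat escalate to a ticket?
    user_confirmed_helpful: bool  # did the user explicitly say it helped?


def naive_resolution(chat: Chat) -> bool:
    """Count every chat that did not end in a ticket as resolved."""
    return not chat.ticket_filed


def confirmed_resolution(chat: Chat) -> bool:
    """Stricter rule: no ticket AND the user confirmed the answer helped."""
    return not chat.ticket_filed and chat.user_confirmed_helpful


# The user who slams the laptop shut: no ticket, no confirmation.
rage_quit = Chat(ticket_filed=False, user_confirmed_helpful=False)

print(naive_resolution(rage_quit))      # billed under the naive rule
print(confirmed_resolution(rage_quit))  # not billed under the stricter rule
```

The stricter rule avoids charging for the rage quit, but it also misses every satisfied user who simply leaves without clicking anything, so neither rule is obviously right.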

Outcome-based pricing requires finity

Intercom’s outcome-based pricing works because support tickets are limited. You know that if you get 1000 customer chats a month, you’ll pay a maximum of $990.

That’s not true for all AI agents. A proactive, outcome-based AI SDR might get 500 meetings in a week, but if you only have 2 salespeople, that’s counterproductive. You’re paying for a ton of leads you can’t handle (or, worse, that aren’t qualified).

It’s hard to convince customers their AI agent won’t go crazy and charge them for outcomes that may not be useful.
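The contrast between the two cases can be sketched with a little arithmetic. The support numbers come from the example above; the price per meeting and the weekly capacity per salesperson are hypothetical.

```python
def max_monthly_bill(max_outcomes: int, price_per_outcome: float) -> float:
    """Worst-case bill when the number of possible outcomes is capped."""
    return max_outcomes * price_per_outcome


def wasted_spend(outcomes_delivered: int, capacity: int, price_per_outcome: int) -> int:
    """Money paid for outcomes beyond what the team can actually handle."""
    unusable = max(0, outcomes_delivered - capacity)
    return unusable * price_per_outcome


# Bounded case: 1,000 chats a month at $0.99 per resolution.
support_cap = max_monthly_bill(1_000, 0.99)

# Unbounded case: 500 booked meetings in a week, 2 salespeople.
MEETINGS_PER_REP = 20   # hypothetical weekly capacity per salesperson
PRICE_PER_MEETING = 50  # hypothetical price per booked meeting
sdr_waste = wasted_spend(500, 2 * MEETINGS_PER_REP, PRICE_PER_MEETING)

print(f"support bill is capped at ${support_cap:.2f}")
print(f"SDR spend on meetings nobody can take: ${sdr_waste}")
```

The support bill has a natural ceiling because customers generate the chats. The SDR agent generates its own outcomes, so without a cap the customer pays for volume it cannot use.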

Where will outcome-based pricing actually work?

I’m skeptical of outcome-based pricing as a “next big thing”, but not as a general concept. There will be places where it aligns customer-vendor incentives, lowers costs and increases margins.

But this requires a few circumstances:

You have all the data: You need to be able to measure the outcome in the first place, which most companies can’t do.

The outcome is easy to measure: A support ticket resolved is measurable, an HR person’s work output isn’t.

The outcome is qualitatively uniform: Qualified meetings are more or less qualified meetings. But a PR definitely isn’t a PR.

This rules it out for almost all roles inside businesses. But if we want to unlock outcome-based pricing as a new pricing strategy, we need to stop equating the roles AI agents might play with the roles humans play.

Outcome-based micro agents

The paid-by-the-PR engineer wouldn’t work. But how about a paid-by-the-fix agent that only fixes bugs? Companies are happy to pay people bug bounties, so there’s precedent that this would work.

You can imagine the same for CI/CD, building APIs and integrations, tests, etc.

We might not hire a human just to fix bugs, but we might have an agent for that. Many jobs do have a component where outcomes are easy to measure and more uniform.

If we want to unlock outcome-based pricing, we need to escape the confines of human jobs. In engineering terms, most human jobs are a monolith. They perform a bundle of activities that’s hard to untangle.

They work on features only they have context on, run marketing campaigns only they understand fully, operate HR departments, etc.

Outcome-based AI agents will be like microservices for human work: each one expertly performs a single aspect of the workflow with a directly measurable outcome, for which it gets a commission.

Then, outcome-based pricing makes sense and creates better margins on AI products. For everything else, they’ll earn a salary, sorry, subscription.